Google DeepMind Unveils Genie 2: A Breakthrough in AI Training with 3D World Generation

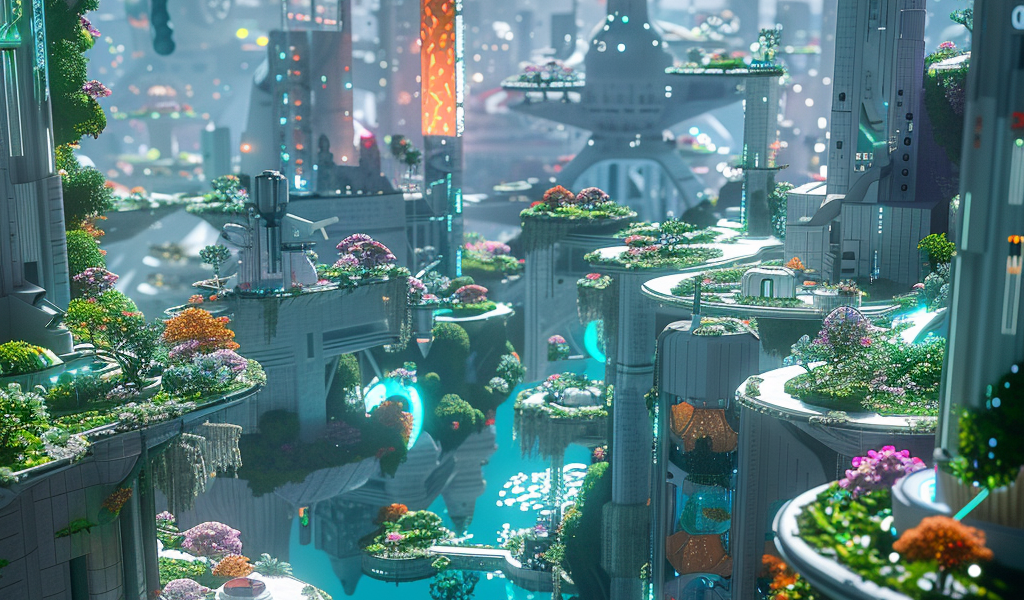

Google DeepMind has unveiled Genie 2, a foundation world model built for training and evaluating AI agents. From a single prompt image, Genie 2 can generate an endless variety of action-controllable, playable 3D environments, which either a human or an AI agent can play using keyboard and mouse inputs. Beyond agent training, this capability also opens new possibilities for creative workflows, such as prototyping interactive experiences.

Games have long been central to AI research, offering engaging platforms for testing and advancing AI capabilities. From early work on Atari games to landmark systems such as AlphaGo and AlphaStar, Google DeepMind has repeatedly used the challenges of game environments to drive progress. Games provide a safe setting in which to explore AI behavior, along with a clear way to measure progress.

Genie 2 could substantially expand the potential for training general embodied agents by addressing a long-standing bottleneck: the scarcity of rich and diverse training environments. With this model, future agents could be trained and evaluated on a virtually limitless curriculum of novel worlds, which may help them adapt to unfamiliar situations, including those encountered in real-world applications.

Genie 2 also enables rapid prototyping. Because a new interactive environment can be produced from a single prompt image, researchers can quickly create fresh settings in which to train and evaluate agents, and designers can experiment with ideas for interactive experiences without building each world by hand.

Its predecessor, Genie 1, focused on generating 2D worlds. Genie 2 is a significant step up in complexity and versatility: it simulates rich 3D worlds, including the consequences of actions such as jumping or swimming, giving agents a more immersive environment in which to train.

Genie 2 was trained on a large-scale video dataset and, as a result, exhibits a range of emergent capabilities. These include complex character animation, physics simulation, and the ability to model, and therefore predict, the behavior of other agents in the environment. Together, these capabilities allow for more realistic interactions and scenarios.

To demonstrate these capabilities, Google DeepMind has released several example videos in which a human interacts with environments generated by Genie 2. Each environment was generated from a single prompt image created with Imagen 3, Google DeepMind's state-of-the-art text-to-image model, showing how a still image can be turned into a dynamic, interactive world.

Genie 2 marks an important moment for AI research, particularly for training embodied agents. By generating diverse, complex 3D worlds from simple prompts, it offers new opportunities for training and evaluating agents in interactive environments and could enable innovative applications across a range of fields. As AI continues to evolve, models like Genie 2 are likely to play a significant role in developing more capable intelligent systems and in supporting creative work.