Apple has introduced its latest multimodal machine learning model, Ferret 7B, marking a significant advance in the company's AI development efforts. The model could eventually help improve services like Siri, and it signals Apple's commitment to building stronger AI capabilities into its products.

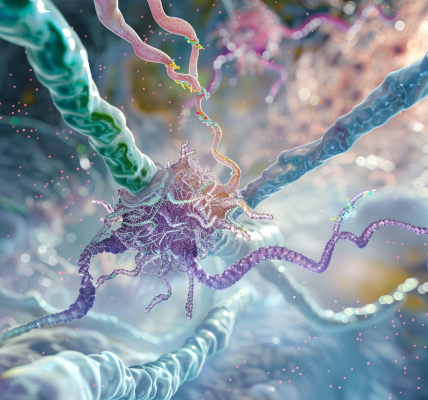

At its core, Ferret is a large language model that can understand visual inputs paired with text prompts and link language to specific regions of an image, a capability known as 'grounding'. This enables interaction with images in context, for example selecting part of an image to condition the model's response. Although Ferret's code was open-sourced in October, the trained model weights have only recently become available, showcasing Apple's progress in multimodal AI.
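To make grounding more concrete, here is a minimal sketch of how a selected region of an image might be turned into text the model can read. It assumes, purely for illustration, that box corners are normalized onto an integer grid from 0 to 999 and written inline as `[x1, y1, x2, y2]`; the helper names `normalize_box` and `box_to_prompt` and the `<region>` placeholder are hypothetical, not Ferret's documented interface.

```python
def normalize_box(box, width, height, bins=1000):
    """Map a pixel-space box (x1, y1, x2, y2) onto an integer grid of `bins` steps.

    Hypothetical helper: the 1000-step grid is an assumption for illustration.
    """
    x1, y1, x2, y2 = box

    def scale(value, extent):
        # Clamp the coordinate to the image, then map [0, extent] onto [0, bins - 1].
        value = min(max(value, 0), extent)
        return min(int(value / extent * bins), bins - 1)

    return (scale(x1, width), scale(y1, height), scale(x2, width), scale(y2, height))


def box_to_prompt(question, box, width, height):
    """Replace a <region> placeholder with normalized coordinates (hypothetical format)."""
    x1, y1, x2, y2 = normalize_box(box, width, height)
    return question.replace("<region>", f"[{x1}, {y1}, {x2}, {y2}]")


# The user selects the bottom-right quarter of a 640x480 image.
prompt = box_to_prompt("What is the object in <region> used for?", (320, 240, 640, 480), 640, 480)
print(prompt)  # What is the object in [500, 500, 999, 999] used for?
```

Normalizing to a fixed grid keeps the coordinates independent of the original image resolution, so the same prompt format works for any input size.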

As Apple continues to lead in on-device machine learning, advances on iOS are expected to carry over to macOS, since both platforms run on Apple silicon. With tools like MLX, Apple's machine learning framework for Apple silicon, more models can run efficiently on Apple chips. Ferret is likely intended for future iOS devices, with more powerful versions potentially targeting Macs.

Compared with Siri's limited abilities, Ferret represents a significant leap forward. With compact models such as the 7B variant, which can be quantized to shrink them further, Apple may soon have AI capabilities that rival much larger models like GPT-4 while remaining small enough for mobile devices. This could lead to substantial improvements in iOS 18.
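Note that "7B" refers to the parameter count; quantization is a separate step that stores each weight in fewer bits. The sketch below shows symmetric, per-tensor 4-bit quantization, assumed here purely for illustration; it is not a description of Apple's actual pipeline.

```python
def quantize_int4(weights):
    """Symmetric per-tensor 4-bit quantization: floats -> ints in [-8, 7] plus one scale."""
    max_abs = max(abs(w) for w in weights)
    # 7 is the largest positive int4 value; guard against an all-zero tensor.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int4 codes."""
    return [v * scale for v in q]


weights = [0.12, -0.7, 0.33, 0.05, -0.21]
q, scale = quantize_int4(weights)
restored = dequantize(q, scale)
# Each restored weight is within half a quantization step of the original.
print(q, [round(r, 3) for r in restored])
```

Production schemes typically use a separate scale for each small group of weights to keep the error low; the single scale here just keeps the idea visible.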

Ferret was trained on datasets that Apple curated and augmented, notably GRIT (Ground-and-Refer Instruction Tuning), with a focus on grounding knowledge and on understanding the spatial relationships between objects. Apple also introduced a benchmark, Ferret-Bench, designed to showcase these strengths, a practice other companies have adopted for their own models. Training was done on Nvidia GPUs, which is consistent with the view that Apple has limited in-house compute for training large models.

Notably, Ferret builds on existing open research rather than starting from scratch: its code is based on the LLaVA project, and its released weights are applied on top of the Vicuna language model. This shows Apple's willingness to build on the work of the wider research community.

Ferret's training approach allows for smaller or larger versions aimed at different Apple devices. The 7B-parameter model appears suited to iOS devices, while the 13B model may be a better fit for Macs. Apple's incremental, multi-year approach to AI is expected to drive rapid improvements across its product lines.
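A rough back-of-the-envelope calculation shows why parameter count maps onto device class. This sketch counts weight storage only (ignoring activations, caches, and runtime overhead), so real memory use is higher; the bit widths are illustrative assumptions, not Apple's deployment settings.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate storage for model weights alone, in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


for name, params in [("7B", 7e9), ("13B", 13e9)]:
    for bits in (16, 8, 4):
        print(f"{name} @ {bits}-bit: {weight_memory_gb(params, bits):.1f} GB")
```

At 16 bits the 7B model needs about 14 GB for its weights and the 13B model about 26 GB; at 4 bits these drop to about 3.5 GB and 6.5 GB, which is why the smaller model is the more plausible fit for phones.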

Under the hood, Ferret uses a spatial-aware visual sampler that extracts features from image regions of any shape, not just bounding boxes, helping it understand relationships in images. Related capabilities such as segmentation and subject identification are already present in Apple's on-device APIs. Apple's approach favors practical, near-term utility over pure research.
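The idea of pulling features from an arbitrarily shaped region can be sketched as sampling points inside a region mask and pooling the image features found there. This is a simplified illustration under assumed inputs (a grid of feature vectors and a boolean mask); Ferret's actual sampler is more elaborate, and the function name `pool_region_features` is hypothetical.

```python
import random


def pool_region_features(feature_map, mask, num_samples=8, seed=0):
    """Average features at points sampled inside a free-form region mask.

    feature_map: H x W grid of feature vectors (lists of floats).
    mask: H x W grid of booleans marking the referred region.
    """
    inside = [(r, c) for r, row in enumerate(mask) for c, flag in enumerate(row) if flag]
    if not inside:
        raise ValueError("mask selects no pixels")
    rng = random.Random(seed)
    # Sample with replacement so even tiny regions yield num_samples points.
    points = [rng.choice(inside) for _ in range(num_samples)]
    dim = len(feature_map[0][0])
    pooled = [0.0] * dim
    for r, c in points:
        for i, v in enumerate(feature_map[r][c]):
            pooled[i] += v / num_samples
    return pooled


H, W = 4, 4
# An L-shaped (non-rectangular) region in the top-left corner.
mask = [[(r < 2 and c < 2) or (r == 2 and c == 0) for c in range(W)] for r in range(H)]
feature_map = [[[1.0, 2.0] if mask[r][c] else [0.0, 0.0] for c in range(W)] for r in range(H)]
print(pool_region_features(feature_map, mask))  # [1.0, 2.0]
```

Because only points inside the mask are sampled, features from outside the irregular region never leak into the pooled vector, which a plain bounding-box crop could not guarantee.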

Testing has demonstrated Ferret's impressive multimodal abilities and real progress in contextual visual understanding. This milestone underscores Apple's ambition to lead in AI and to bring multimodal capabilities across its product range.